34. Optimal Growth I: The Stochastic Optimal Growth Model#

Contents

34.1. Overview#

In this lecture we’re going to study a simple optimal growth model with one agent.

The model is a version of the standard one sector infinite horizon growth model studied in

The technique we use to solve the model is dynamic programming.

Our treatment of dynamic programming follows on from earlier treatments in our lectures on shortest paths and job search.

We’ll discuss some of the technical details of dynamic programming as we go along.

34.2. The Model#

Consider an agent who owns an amount

This output can either be consumed or invested.

When the good is invested it is transformed one-for-one into capital.

The resulting capital stock, denoted here by

Production is stochastic, in that it also depends on a shock

Next period output is

where

The resource constraint is

and all variables are required to be nonnegative.

34.2.1. Assumptions and Comments#

In what follows,

The sequence

The common distribution of each

The production function

Depreciation of capital is not made explicit but can be incorporated into the production function.

While many other treatments of the stochastic growth model use

This will allow us to treat a stochastic model while maintaining only one state variable.

We consider alternative states and timing specifications in some of our other lectures.

34.2.2. Optimization#

Taking

subject to

where

In (34.3) we are assuming that the resource constraint (34.1) holds with equality — which is reasonable because

In summary, the agent’s aim is to select a path

nonnegative,

feasible in the sense of (34.1),

optimal, in the sense that it maximizes (34.2) relative to all other feasible consumption sequences, and

adapted, in the sense that the action

In the present context

34.2.3. The Policy Function Approach#

One way to think about solving this problem is to look for the best policy function.

A policy function is a map from past and present observables into current action.

We’ll be particularly interested in Markov policies, which are maps from the current state

For dynamic programming problems such as this one (in fact for any Markov decision process), the optimal policy is always a Markov policy.

In other words, the current state

This is quite intuitive but if you wish you can find proofs in texts such as [SLP89] (section 4.1).

Hereafter we focus on finding the best Markov policy.

In our context, a Markov policy is a function

In what follows, we will call

In other words, a feasible consumption policy is a Markov policy that respects the resource constraint.

The set of all feasible consumption policies will be denoted by

Each

This is the time path for output when we choose and stick with the policy

We insert this process into the objective function to get

This is the total expected present value of following policy

The aim is to select a policy that makes this number as large as possible.

The next section covers these ideas more formally.

34.2.4. Optimality#

The policy value function

when

In other words, it is the lifetime value of following policy

The value function is then defined as

The value function gives the maximal value that can be obtained from state

A policy

34.2.5. The Bellman Equation#

With our assumptions on utility and production function, the value function as defined in (34.8) also satisfies a Bellman equation.

For this problem, the Bellman equation takes the form

This is a functional equation in

The term

the state is

consumption is set to

As shown in EDTC, theorem 10.1.11 and a range of other texts.

The value function

satisfies the Bellman equation

In other words, (34.9) holds when

The intuition is that maximal value from a given state can be obtained by optimally trading off

current reward from a given action, vs

expected discounted future value of the state resulting from that action

The Bellman equation is important because it gives us more information about the value function.

It also suggests a way of computing the value function, which we discuss below.

34.2.6. Greedy policies#

The primary importance of the value function is that we can use it to compute optimal policies.

The details are as follows.

Given a continuous function

for every

In other words,

In our setting, we have the following key result

A feasible consumption policy is optimal if and only if it is

-greedy

The intuition is similar to the intuition for the Bellman equation, which was provided after (34.9).

See, for example, theorem 10.1.11 of EDTC.

Hence, once we have a good approximation to

The advantage is that we are now solving a much lower dimensional optimization problem.

34.2.7. The Bellman Operator#

How, then, should we compute the value function?

One way is to use the so-called Bellman operator.

(An operator is a map that sends functions into functions)

The Bellman operator is denoted by

In other words,

By construction, the set of solutions to the Bellman equation (34.9) exactly coincides with the set of fixed points of

For example, if

which says precisely that

It follows that

34.2.8. Review of Theoretical Results#

One can also show that

See EDTC, lemma 10.1.18.

Hence it has exactly one fixed point in this set, which we know is equal to the value function.

It follows that

The value function

Starting from any bounded and continuous

This iterative method is called value function iteration.

We also know that a feasible policy is optimal if and only if it is

It’s not too hard to show that a

Hence at least one optimal policy exists.

Our problem now is how to compute it.

34.2.9. Unbounded Utility#

The results stated above assume that the utility function is bounded.

In practice economists often work with unbounded utility functions — and so will we.

In the unbounded setting, various optimality theories exist.

Unfortunately, they tend to be case specific, as opposed to valid for a large range of applications.

Nevertheless, their main conclusions are usually in line with those stated for the bounded case just above (as long as we drop the word “bounded”).

Consult, for example, section 12.2 of EDTC, [Kam12] or [MdRV10].

34.3. Computation#

Let’s now look at computing the value function and the optimal policy.

34.3.1. Fitted Value Iteration#

The first step is to compute the value function by value function iteration.

In theory, the algorithm is as follows

Begin with a function

Solving (34.11), obtain the function

Unless some stopping condition is satisfied, set

This generates the sequence

However, there is a problem we must confront before we implement this procedure: The iterates can neither be calculated exactly nor stored on a computer.

To see the issue, consider (34.11).

Even if

Clearly this is impossible.

What we will do instead is use fitted value function iteration.

The procedure is to record the value of the function

More precisely, the algorithm will be

Begin with an array of values

Build a function

Obtain and record the value

Unless some stopping condition is satisfied, set

How should we go about step 2?

This is a problem of function approximation, and there are many ways to approach it.

What’s important here is that the function approximation scheme must not only produce a good approximation to

One good choice from both respects is continuous piecewise linear interpolation (see this paper for further discussion).

The next figure illustrates piecewise linear interpolation of an arbitrary function on grid points

using LinearAlgebra, Statistics

using LaTeXStrings, Plots, Interpolations, NLsolve, Optim, Random

using Optim: maximum, maximizer

f(x) = 2 .* cos.(6x) .+ sin.(14x) .+ 2.5

c_grid = 0:0.2:1

f_grid = range(0, 1, length = 150)

Af = LinearInterpolation(c_grid, f(c_grid))

plt = plot(xlim = (0, 1), ylim = (0, 6))

plot!(plt, f, f_grid, color = :blue, lw = 2, alpha = 0.8,

label = "true function")

plot!(plt, f_grid, Af.(f_grid), color = :green, lw = 2, alpha = 0.8,

label = "linear approximation")

plot!(plt, f, c_grid, seriestype = :sticks, linestyle = :dash, linewidth = 2,

alpha = 0.5,

label = "")

plot!(plt, legend = :top)

Another advantage of piecewise linear interpolation is that it preserves useful shape properties such as monotonicity and concavity / convexity.

34.3.2. The Bellman Operator#

Here’s a function that implements the Bellman operator using linear interpolation

function T(w; p, tol = 1e-10)

(; beta, u, f, Xi, y) = p # unpack parameters

w_func = LinearInterpolation(y, w)

Tw = similar(w)

sigma = similar(w)

for (i, y_val) in enumerate(y)

# solve maximization for each point in y, using y itself as initial condition.

results = maximize(c -> u(c; p) +

beta * mean(w_func.(f(y_val - c; p) .* Xi)),

tol, y_val)

Tw[i] = maximum(results)

sigma[i] = maximizer(results)

end

return (; w = Tw, sigma) # returns named tuple of results

end

T (generic function with 1 method)

Notice that the expectation in (34.11) is computed via Monte Carlo, using the approximation

where

Monte Carlo is not always the most efficient way to compute integrals numerically but it does have some theoretical advantages in the present setting.

(For example, it preserves the contraction mapping property of the Bellman operator — see, e.g., [PalS13])

34.3.3. An Example#

Let’s test out our operator when

As is well-known (see [LS18], section 3.1.2), for this particular problem an exact analytical solution is available, with

The optimal consumption policy is

Let’s code this up now so we can test against it below

In addition to the model parameters, we need a grid and some shock draws for Monte Carlo integration.

Random.seed!(42) # for reproducible results

u(c; p) = log(c) # utility

f(k; p) = k^p.alpha # deterministic part of production function

function OptimalGrowthModel(; alpha = 0.4, beta = 0.96, mu = 0.0, s = 0.1,

u = u, f = f, # defaults defined above

y = range(1e-5, 4.0, length = 200), # grid on y

Xi = exp.(mu .+ s * randn(250)))

return (; alpha, beta, mu, s, u, f, y, Xi)

end # named tuples defaults

# True value and policy function

function v_star(y; p)

(; alpha, mu, beta) = p

c1 = log(1 - alpha * beta) / (1 - beta)

c2 = (mu + alpha * log(alpha * beta)) / (1 - alpha)

c3 = 1 / (1 - beta)

c4 = 1 / (1 - alpha * beta)

return c1 + c2 * (c3 - c4) + c4 * log(y)

end

c_star(y; p) = (1 - p.alpha * p.beta) * y

c_star (generic function with 1 method)

34.3.4. A First Test#

To test our code, we want to see if we can replicate the analytical solution numerically, using fitted value function iteration.

Now let’s do some tests.

As one preliminary test, let’s see what happens when we apply our Bellman operator to the exact solution

In theory, the resulting function should again be

In practice we expect some small numerical error.

p = OptimalGrowthModel() # use all default parameters from named tuple

w_star = v_star.(p.y; p) # evaluate closed form value along grid

w = T(w_star; p).w # evaluate operator, access Tw results

plt = plot(ylim = (-35, -24))

plot!(plt, p.y, w, linewidth = 2, alpha = 0.6, label = L"T(v^*)")

plot!(plt, p.y, w_star, linewidth = 2, alpha = 0.6, label = L"v^*")

plot!(plt, legend = :bottomright)

The two functions are essentially indistinguishable, so we are off to a good start.

Now let’s have a look at iterating with the Bellman operator, starting off from an arbitrary initial condition.

The initial condition we’ll start with is

w = 5 * log.(p.y) # An initial condition -- fairly arbitrary

n = 35

plot(xlim = (extrema(p.y)), ylim = (-50, 10))

lb = "initial condition"

plt = plot(p.y, w, color = :black, linewidth = 2, alpha = 0.8, label = lb)

for i in 1:n

w = T(w; p).w

plot!(p.y, w, color = RGBA(i / n, 0, 1 - i / n, 0.8), linewidth = 2,

alpha = 0.6,

label = "")

end

lb = "true value function"

plot!(plt, p.y, v_star.(p.y; p), color = :black, linewidth = 2, alpha = 0.8,

label = lb)

plot!(plt, legend = :bottomright)

The figure shows

the first 36 functions generated by the fitted value function iteration algorithm, with hotter colors given to higher iterates

the true value function

The sequence of iterates converges towards

We are clearly getting closer.

We can write a function that computes the exact fixed point

function solve_optgrowth(initial_w; p, iterations = 500, m = 3,

show_trace = false)

results = fixedpoint(w -> T(w; p).w, initial_w; iterations, m, show_trace) # Anderson iteration

v_star = results.zero

sigma = T(results.zero; p).sigma

return (; v_star, sigma, results)

end

solve_optgrowth (generic function with 1 method)

We can check our result by plotting it against the true value

initial_w = 5 * log.(p.y)

sol = solve_optgrowth(initial_w; p)

v_star_approx = sol.v_star

println("Converged in $(sol.results.iterations) to an ||residuals||_∞ of $(sol.results.residual_norm)")

plt = plot(ylim = (-35, -24))

plot!(plt, p.y, v_star_approx, linewidth = 2, alpha = 0.6,

label = "approximate value function")

plot!(plt, p.y, v_star.(p.y; p), linewidth = 2, alpha = 0.6,

label = "true value function")

plot!(plt, legend = :bottomright)

Converged in 15 to an ||residuals||_∞ of 2.179099567456433e-9

The figure shows that we are pretty much on the money

Note that this converges in fewer than the 36 iterations printed above because it is using Anderson iteration - where the

34.3.5. The Policy Function#

To compute an approximate optimal policy, we take the approximate value function we just calculated and then compute the corresponding greedy policy.

The next figure compares the result to the exact solution, which, as mentioned

above, is

plt = plot(p.y, T(v_star_approx; p).sigma, lw = 2, alpha = 0.6,

label = "approximate policy function")

plot!(plt, p.y, c_star.(p.y; p), lw = 2, alpha = 0.6,

label = "true policy function")

plot!(plt, legend = :bottomright)

The figure shows that we’ve done a good job in this instance of approximating the true policy.

34.4. Exercises#

34.4.1. Exercise 1#

Once an optimal consumption policy

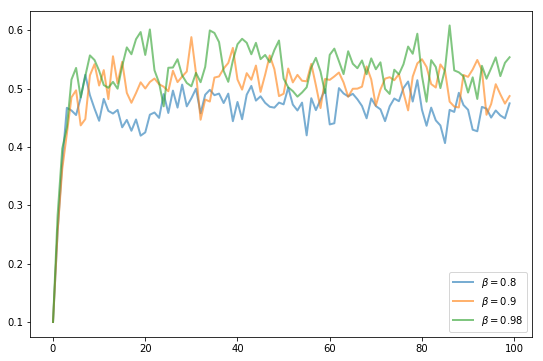

The next figure shows a simulation of 100 elements of this sequence for three different discount factors (and hence three different policies).

In each sequence, the initial condition is

The discount factors are discount_factors = (0.8, 0.9, 0.98).

We have also dialed down the shocks a bit.

p = OptimalGrowthModel(s = 0.05)

p.Xi

Show code cell output

250-element Vector{Float64}:

0.9819961668245721

1.0126664087134005

0.9843739746850739

0.9845578517434581

1.0416597330713688

1.0241232892126522

0.9579326855331085

0.9291692140795736

0.8996794537879703

1.0021914808932793

0.9595731512645318

1.0429095238242871

1.0219313258836822

⋮

1.0013570363566526

0.936028836092597

0.9944175678826758

0.9775640668429453

1.022229941967392

1.028686047499314

0.9341510619913468

0.9070123342340449

0.9489873846557617

1.0177798574430181

0.9897225222212319

0.9845828858353305

Otherwise, the parameters and primitives are the same as the log linear model discussed earlier in the lecture.

Notice that more patient agents typically have higher wealth.

Replicate the figure modulo randomness.

34.5. Solutions#

34.5.1. Exercise 1#

Here’s one solution (assuming as usual that you’ve executed everything above)

function simulate_og(sigma, p, y0, ts_length)

y = zeros(ts_length)

y[1] = y0

for t in 1:(ts_length - 1)

y[t + 1] = (y[t] - sigma(y[t]))^p.alpha * exp(p.mu + p.s * randn())

end

return y

end

beta_vals = [0.9 0.94 0.98]

ts_length = 100

y0 = 0.1

plt = plot()

for beta in beta_vals

p = OptimalGrowthModel(; beta) # change beta from default

initial_w = 5 * log.(p.y)

sol = solve_optgrowth(initial_w; p)

sigma_func = LinearInterpolation(p.y, sol.sigma)

y = simulate_og(sigma_func, p, y0, ts_length)

plot!(plt, 0:(ts_length - 1), y, lw = 2, alpha = 0.6,

label = L"\beta = %$beta")

end

plt